Saving the Caturday with a video proxy

Since at least 2018 I've been hooked on using iOS Shortcuts to post things to my site.

With IndieWeb building blocks like IndieAuth and Micropub, it's more or less possible to create Shortcuts to post any content you want to your personal site, mixing in images and video, and a lot more, all without needing third-party apps running on your phone - or even in a browser!

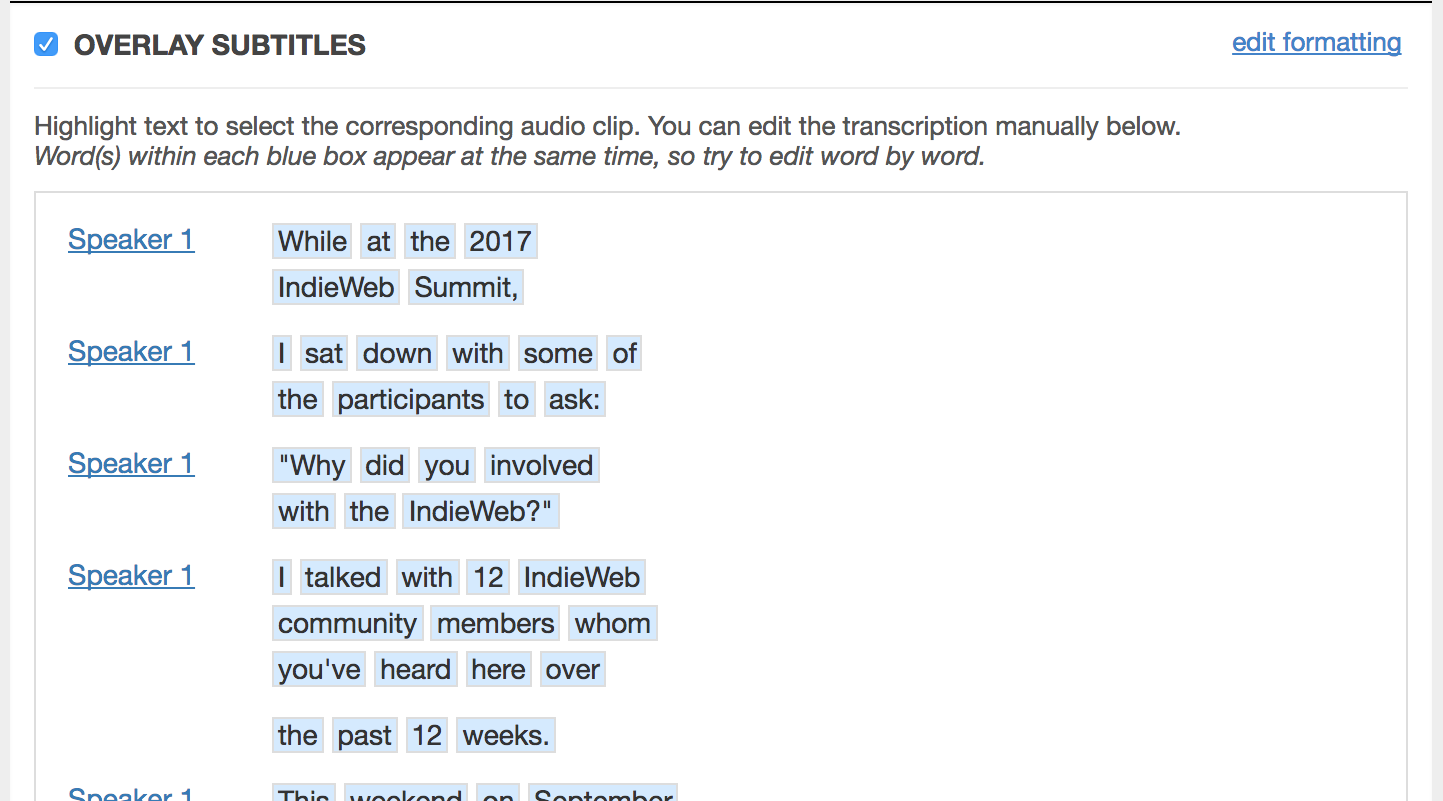

The most important IndieWeb shortcut in my life the last couple of years is the one with which I have posted an animated cat photo to my site every day. In my phone's Photos app I would choose a Live Photo and make sure it's set to Bounce or (less likely) Loop, which will allow it to be shared as a video, then I'd use the Photos app Share function to send it to my Video Micropub shortcut. It would:

- Allow me to trim the video

- Upload the trimmed result to my site via my Micropub Media Endpoint

- Prompt me for a caption for the video and a tag for the post

- Post it to my site via Micropub, where it will show up in a few moments.
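As a rough illustration of that last step, here is a minimal sketch of the kind of form-encoded Micropub "create" request such a shortcut ends up sending. This is not the shortcut itself: the function name, endpoint URL, token, and example values are all hypothetical, and it only builds the request without sending it.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_micropub_video_post(micropub_endpoint, token, video_url, caption, tag):
    """Build (but do not send) a form-encoded Micropub create request.

    All argument values are placeholders; a real client would get the
    endpoint and token via IndieAuth discovery and authorization.
    """
    fields = [
        ("h", "entry"),          # create an h-entry post
        ("content", caption),    # the caption typed at the prompt
        ("category[]", tag),     # the tag for the post
        ("video", video_url),    # URL returned by the media endpoint
    ]
    body = urlencode(fields).encode("utf-8")
    return Request(
        micropub_endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


# Hypothetical usage; urllib.request.urlopen(request) would actually send it.
request = build_micropub_video_post(
    "https://example.com/micropub",
    "TOKEN",
    "https://media.example.com/cat.mov",
    "Nap time",
    "caturday",
)
```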

I've always found the Shortcut construction UI to be fiddly, and in general I find shortcuts difficult to debug. However, once a shortcut is working, it usually makes my phone an incredibly powerful tool for managing what I share online.

Suddenly iOS 15 broke my Video Micropub with a non-debuggable "there was a problem running the shortcut" error. I've tried common workarounds suggested online, including re-creating the shortcut from scratch multiple times. It chokes at the media upload step every time.

Without this shortcut, I am kind of at a loss for how to post videos from my phone to my site. There are a number of great Micropub clients in the world, but none of them handles video. I've built Micropub clients of my own in the past, but each time I find it to be a lot of work to keep up with changes in specs or library dependencies, and I am not ready to commit to another one at this time.

Bizarrely, I have a different shortcut, probably thanks to Rosemary or Sebastiaan, which does allow me to upload files to my media endpoint, and get the resulting URL.

I combined that with a very quick and very dirty Secret Web Form that allows me to paste in the URL for the uploaded video, creating a Caturday post.

It's certainly more steps, but at least it's doable from my phone once again.

... or it would be if my phone was creating web-compatible videos!!!

Several versions of iOS ago (maybe starting with 12?), a new system default was introduced that greatly improved the efficiency with which photos and videos are stored on iOS devices. This is done by using newer and more intensive codecs to compress media files: HEIC for images, and HEVC for video. It turns out that these are not codecs commonly available in web browsers. You can work around this by changing a system-wide setting to instead save images and video in the "Most Compatible" formats - JPEG and MP4 (with video encoded as h264).

I'm not sure where the root of this change comes from, but for some of my Live Photos, making them a Loop or Bounce video creates an HEVC video, and the only working shortcut I have for uploading them to my site takes them as-is. The result is a caturday post that doesn't play!

So let us arrive at last at the day-saving mentioned in the title of this post.

I'm a big fan of the IndieWeb building block philosophy - simplified systems with distinct sets of responsibilities which can be composed to create complex behaviors using the web as glue. For example, the Micropub spec defines media endpoints with very few required features. The main one: allow a file to be uploaded and to get back a URL where that file will be available on the web.
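To make that "upload a file, get back a URL" contract concrete, here is a small sketch of what a media endpoint upload looks like from the client side, using only the Python standard library. Per the Micropub spec, the file goes in a multipart part named `file`, and the endpoint answers `201 Created` with the new URL in the `Location` header. The function names, endpoint URL, and token are made up for illustration.

```python
import uuid
from urllib.request import Request, urlopen


def build_media_upload(media_endpoint, token, filename, data,
                       content_type="video/quicktime"):
    """Build a multipart/form-data upload request for a Micropub media endpoint."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        # The Micropub spec names the multipart part "file".
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'.encode(),
        f"Content-Type: {content_type}\r\n\r\n".encode(),
        data,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return Request(
        media_endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


def upload(request):
    """Send the request and return the uploaded file's URL (needs network access)."""
    with urlopen(request) as response:
        # A conforming endpoint puts the file's URL in the Location header.
        return response.headers["Location"]


# Hypothetical usage, building the request without sending it:
request = build_media_upload(
    "https://media.example.com/upload", "TOKEN", "cat.mov", b"fake video bytes"
)
```

Notice that nothing here says what the endpoint should do with the file afterwards, which is exactly the gap a proxy can fill.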

The spec doesn't say anything about how to handle specifics that might be important. For example, an image on my phone may be a hefty 4 or 5 megabyte pixel party, but I don't want to actually display that whole thing on my website!

I could add some special handling to my media endpoint. For example, have it automatically resize images, save them in multiple formats suitable for different browsers, and more.

But I could also slip a service in the middle that uses the raw uploaded files to create exactly the things I want on demand.

This is where image proxies come in. They are web services that let you ask for a given image on the web, but transformed in some ways.

I have used and loved willnorris/imageproxy as a self-hosted solution. I currently use Cloudinary to handle this on my site. Go ahead and find a still photo on my site, right-click to inspect and check the image URL!

It turns out that with some setup, Cloudinary will do this for smaller video files as well! This post is getting long, so here are the details:

- Create an "Auto upload URL mapping". This is kind of like a folder alias that maps a path on Cloudinary to a path on your server. In my case, I made one named 'mmmgre' which maps to https://media.martymcgui.re/

- Update your site template to rewrite your video URLs to fetch them from Cloudinary.

That's it!

So, for example, this video is a .mov file uploaded from my phone, encoded in HEVC: https://media.martymcgui.re/0d/93/a0/f1/be676103d289914ba9660cb8ba0eca2cb87a95c801d750ffc00bbfae.mov

The resulting Cloudinary URL is: https://res.cloudinary.com/schmarty/video/upload/vc_h264/mmmgre/0d/93/a0/f1/be676103d289914ba9660cb8ba0eca2cb87a95c801d750ffc00bbfae.mov

Cloudinary supports a lot of transformations in their URLs, which go right between the `video/upload/` bit and the auto-upload folder name (`/mmmgre/...`). In this case the only one needed is `vc_h264` - this tells Cloudinary to make this video encoded with h264 - exactly what I wanted.
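The template rewrite boils down to a string substitution. Here is a minimal sketch in Python, assuming the cloud name (`schmarty`), mapping folder (`mmmgre`), and media host from the URLs above; the function and constant names are invented for illustration, and my actual site template is not written this way.

```python
# Values taken from the example URLs in this post.
MEDIA_PREFIX = "https://media.martymcgui.re/"
CLOUD_NAME = "schmarty"
MAPPING_FOLDER = "mmmgre"


def cloudinary_video_url(media_url, transformation="vc_h264"):
    """Rewrite a media endpoint URL so the video is fetched through Cloudinary.

    URLs that do not live on the media host are returned unchanged.
    """
    if not media_url.startswith(MEDIA_PREFIX):
        return media_url
    path = media_url[len(MEDIA_PREFIX):]
    # The transformation sits between "video/upload/" and the mapping folder.
    return (
        f"https://res.cloudinary.com/{CLOUD_NAME}/video/upload/"
        f"{transformation}/{MAPPING_FOLDER}/{path}"
    )


original = (
    "https://media.martymcgui.re/0d/93/a0/f1/"
    "be676103d289914ba9660cb8ba0eca2cb87a95c801d750ffc00bbfae.mov"
)
print(cloudinary_video_url(original))
```

Running this on the `.mov` URL above prints the Cloudinary URL shown earlier.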

And so, at last, I can post videos from my phone to my website again. The world of eternal Caturday is safe... for now.

... or it would be if I didn't need an extra step to ensure that my media upload shortcut actually sends the _video_ instead of a `.jpg`! I'm currently using an app called Metapho to extract the video in a goofy way that works for now but this stuff is mad janky y'all.

In the future:

- I'd rather be hosting this myself, but since I'm already using Cloudinary and these Live Photo-based videos are very small, this was a huge win for time-spent-hacking.

- Of course I'd much rather overall just have my friggin' shortcut friggin' working again, sheesh.

- There has been some chatter in the IndieWeb chat about different approaches to handling video content and posts in Micropub clients. I may join in!