Tweaks Ali Spittel’s face tracking filter demo to include a “snapshot” button that creates an image you can right-click and save.

Next up: can I make this into a Micropub client to post directly to my site?

I attended IndieWebCamp NYC 2018 and it was a blast! Check the schedule for links to notes and videos from the awesome keynotes, discussion sessions, and build-day demos. I am so grateful to all the other organizers, to all the new and familiar faces that came out, to those that joined us remotely, to Pace University's Seidenberg School for hosting us, and of course to the sponsors that made it all possible.

I have a lot of thoughts about all the discussions and projects that were talked about, I'm sure. But for now, I'd like to capture some of the TODOs and project ideas that I came away with after the event, and the post-event discussions over food and drink.

More generally: I think there's a really cool future where IndieWeb building blocks are available on free services like Glitch and Neocities. New folks should be able to register a domain and plug them together in an afternoon, with no coding, and get a website that supports posting all kinds of content and social interactions. All for the cost of a domain! And all with the ability to download their content and take it with them if these services change or they outgrow them. I already built some of this as a goof. The big challenges are simplifying the UX and documenting all of the steps to show folks what they will get and how to get it.

Other fun / ridiculous ideas discussed over the weekend:

I am sure there are fun ideas that were discussed that I am leaving out. If you can think of any, let me know!

Excited for another IWC NYC. This one looks to be big! Join us Sept 28-29 in NYC!

This is a write-up of my Sunday hack day project from IndieWebCamp NYC 2017!

You can see my portion of the IWC NYC demos here.

Feel free to skip this intro if you are just here for the HTML how-to!

I've been doing a short ~10 minute podcast about the IndieWeb community since February, an audio edition of the This Week in the IndieWeb weekly newsletter cleverly titled This Week in the IndieWeb Audio Edition.

After the 2017 IndieWeb Summit, each episode of the podcast also featured a brief ~1 minute interview with one of the participants there. As a way of highlighting these interviews outside the podcast itself, I became interested in the idea of "audiograms" – videos that are primarily audio content for sharing on platforms like Twitter and Facebook. I wrote up my first steps into audiograms using WNYC's audiogram generator.

While these audiograms were able to show visually interesting dynamic elements like waveforms or graphic equalizer data, I thought it would be more interesting to include subtitles from the interviews in the videos. I learned that Facebook supports captions in a common format called SRT. However, Twitter's video offerings have no support for captions.

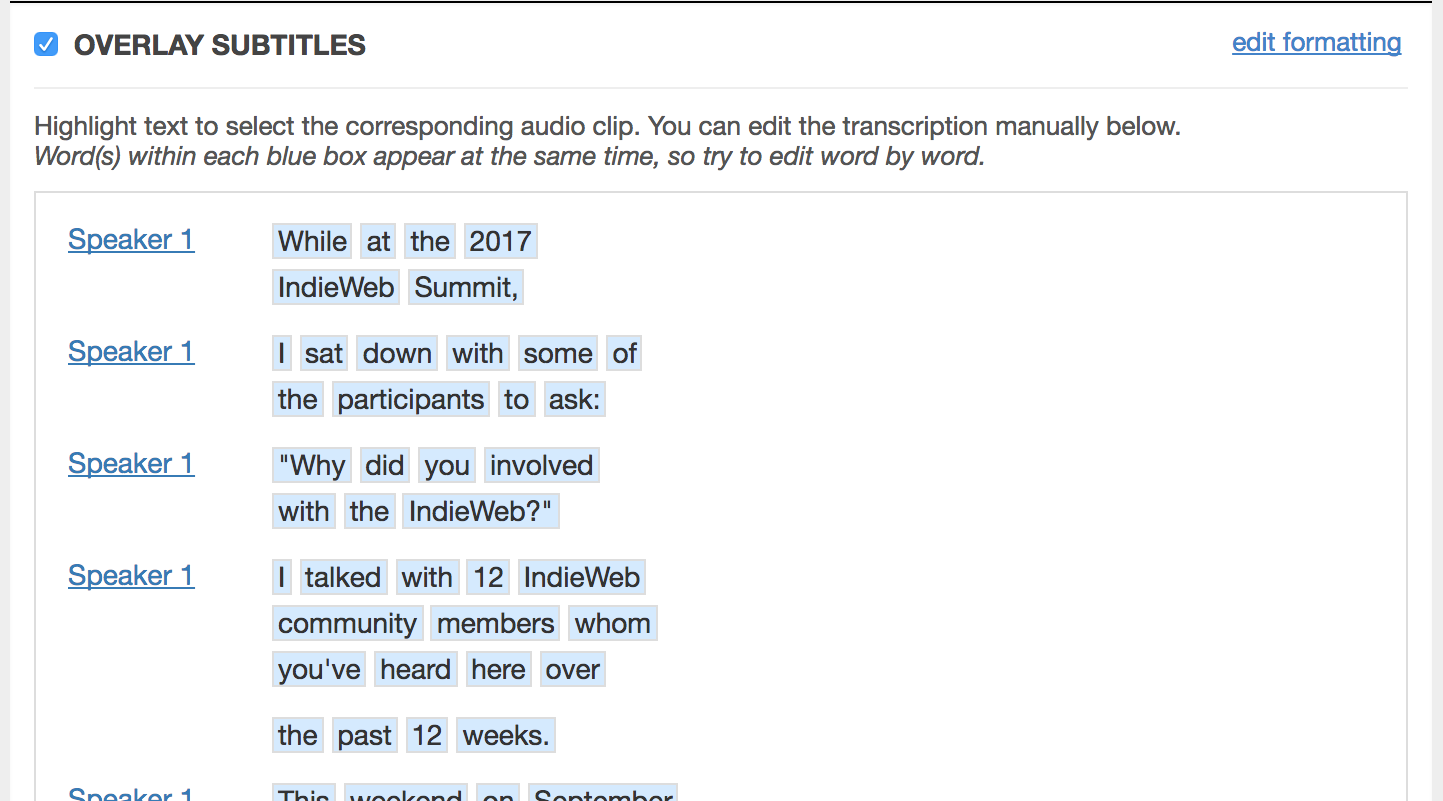

Thankfully, I discovered the BBC's open source fork of audiogram, which supports subtitles and captioning, including the ability to "bake in" subtitles by encoding the words directly into the video frames. It also relies heavily on BBC web infrastructure, and required quite a bit of hacking up to work with what I had available.

In the end, my process looked like this:

You can see an early example here. I liked these posts and found them easy to post to my site as well as Facebook, Twitter, Mastodon, etc. Over time I evolved them a bit to include more info about the interviewee. Here's a later example.

One thing that has stuck with me is the idea that Facebook could be displaying these subtitles, if only I was exporting them in the SRT format. Additionally, I had done some research into subtitles for HTML5 video with WebVTT and the <track> element and wondered if it could work for audio content with some "tricks".

Let's skip to the end and see what we're talking about. I wanted to make a version of my podcast where the entire ~10 minutes could be listened to along with timed subtitles, without creating a 10-minute long video. And I did!

Here is a sample from my example post of an audio track inside an HTML5 <video> element with a subtitle track. You will probably have to click the "CC" button to enable the captioning.

How does it work? Well, browsers aren't actually too picky about the data types of the <source> elements inside a <video> element. You can absolutely give them an audio source.

Add in a poster attribute to the <video> element, and you can give the appearance of a "real" video.

And finally, add in the <track> element with your subtitle track and you are good to go.

The relevant source for my example post looks something like this:

    <video controls poster="poster.png" crossorigin="anonymous" style="width: 100%" src="audio.mp3">
      <source class="u-audio" type="audio/mpeg" src="audio.mp3">
      <track label="English" kind="subtitles" srclang="en" src="https://media.martymcgui.re/.../subtitles.vtt">
    </video>

So, basically:

But is that the whole story? Sadly, no.

In some ways, This Week in the IndieWeb Audio Edition is perfectly suited for automated captioning. In order to keep it short, I spend a good amount of time summarizing the newsletter into a concise script, which I read almost verbatim. I typically end up including the transcript when I post the podcast, hidden inside a <details> element.

This script can be fed into gentle, along with the audio, to find all the alignments - but then I have a bunch of JSON data that is not particularly useful to the browser or even Facebook's player.

Thankfully, as I mentioned above, the BBC audiogram generator can output a Facebook-flavored SRT file, and that is pretty close.

After reading into the pretty expressive WebVTT spec, playing with an SRT to WebVTT converter tool, and finding an in-browser WebVTT validator, I found a pretty quick way of converting those in my favorite text editor which basically boils down to changing something like this:

    00:00:02,24 --> 00:00:04,77
    While at the 2017 IndieWeb Summit,

    00:00:04,84 --> 00:00:07,07
    I sat down with some of the participants to ask:

Into this:

    WEBVTT

    00:00:02.240 --> 00:00:04.770
    While at the 2017 IndieWeb Summit,

    00:00:04.840 --> 00:00:07.070
    I sat down with some of the participants to ask:

Yep. When stripped down to the minimum, the only real differences between these formats are the WEBVTT header line and the time format: periods (instead of commas) delimit subsecond time offsets, with three digits of precision instead of two. Ha!
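If you would rather script that find-and-replace than do it in a text editor, here is a minimal Python sketch of the same conversion. It is not the process I used for the example post, and the function name `srt_to_webvtt` is made up for illustration. It assumes the input looks like the stripped-down SRT above: timestamps with a comma and one to three fractional digits, which get right-padded with zeros the same way `,24` becomes `.240` in the example.

```python
import re

# Matches timestamps such as 00:00:02,24 or 00:00:02,240.
TIMESTAMP = re.compile(r"(\d{2}:\d{2}:\d{2}),(\d{1,3})")


def srt_to_webvtt(srt_text: str) -> str:
    """Convert stripped-down SRT text to WebVTT (hypothetical helper)."""

    def fix(match: re.Match) -> str:
        whole, fraction = match.groups()
        # Swap the comma for a period and pad to three fractional digits.
        return f"{whole}.{fraction.ljust(3, '0')}"

    lines = []
    for line in srt_text.splitlines():
        # Only touch timing lines, so caption text containing digits
        # and commas is left alone.
        if "-->" in line:
            line = TIMESTAMP.sub(fix, line)
        lines.append(line)

    # A WebVTT file starts with a WEBVTT header followed by a blank line.
    return "WEBVTT\n\n" + "\n".join(lines).strip() + "\n"


if __name__ == "__main__":
    sample = (
        "00:00:02,24 --> 00:00:04,77\n"
        "While at the 2017 IndieWeb Summit,\n"
        "\n"
        "00:00:04,84 --> 00:00:07,07\n"
        "I sat down with some of the participants to ask:\n"
    )
    print(srt_to_webvtt(sample))
```

Only lines containing `-->` are rewritten, so the caption text itself passes through unchanged.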

If you've been following the podcast, you may have noticed that I have not started doing this for every episode.

The primary reason is that the BBC audiogram tool becomes verrrrry sluggish when working with a 10-minute long transcript. Editing the timings for my test post took the better part of an hour before I had an SRT file I was happy with. I think I could streamline the process by editing the existing text transcript into "caption-sized" chunks, and write a bit of code that will use the pre-chunked text file and the word-timings from gentle to directly create SRT and WebVTT files.
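Here is a rough Python sketch of what that bit of code might look like. Nothing here is built yet, and the function names are hypothetical. It rests on two assumptions that would need checking against real output: that gentle's JSON has a `words` list whose entries carry `start` and `end` times in seconds when a word was aligned, and that each whitespace-separated token in a caption chunk corresponds to exactly one entry in that list.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp, e.g. 2.24 -> 00:00:02.240."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def chunks_to_webvtt(chunks: list[str], words: list[dict]) -> str:
    """Build WebVTT cues from pre-chunked captions and word timings.

    `words` is assumed to be the "words" list from gentle's JSON output,
    in transcript order, one entry per whitespace-separated token.
    """
    cues = []
    position = 0
    for chunk in chunks:
        count = len(chunk.split())
        span = words[position:position + count]
        position += count

        # Words that could not be aligned are assumed to lack timings.
        timed = [w for w in span if "start" in w and "end" in w]
        if not timed:
            continue  # no timing information: this sketch drops the chunk

        start = format_timestamp(timed[0]["start"])
        end = format_timestamp(timed[-1]["end"])
        cues.append(f"{start} --> {end}\n{chunk}")

    return "WEBVTT\n\n" + "\n\n".join(cues) + "\n"
```

Each cue runs from the first aligned word in its chunk to the last one. Dropping chunks that have no aligned words keeps the sketch short; a real tool would more likely interpolate timings from the neighboring cues. Emitting SRT instead would only mean swapping the timestamp format and leaving off the header.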

Additionally, I'd like to make these tools more widely available to other folks. My current workflow to get gentle's output into the BBC audiogram tool is an ugly hack, but I believe I could make it as "easy" as making sure that gentle is running in the background when you run the audiogram generator.

Beyond the technical aspects, I am excited about this as a way to add extra visual interest to, and potentially increase listener comprehension for, these short audio posts. There are folks doing lots of interesting things with audio. The folks at Gretta, for example, are doing "live transcripts" with a sort of dual navigation mode: click on a paragraph to jump the audio to that point, or click on the audio timeline and the transcript highlights the right spot. Here's an example of what I mean.

I don't know what I'll end up doing with this next, but I'm interested in feedback! Let me know what you think!

Hey Folks in/near NYC! @IndieWebCamp NYC is just 5 days away, 9/30 - 10/1

Last year’s IWC NYC was my first in-person IndieWeb experience, and I was completely caught up by the thoughtful people working first-hand to build a more personal, more social web; a web where your content, identity, and interactions are yours, instead of food for surveillance-powered ad-engines like Facebook.

Since then, I’ve started a Homebrew Website Club in Baltimore, a weekly IndieWeb Podcast, made tons of improvements to my site, and even created some IndieWeb tools, like a micropub media endpoint for storing photos, video, audio, and more, a tool for posting events to your own site, and a tool for posting audio, such as podcasts.

So come on out for two days of participatory discussions, user experience design, and face-to-face help improving our personal websites and the future of the IndieWeb!

I’ll be recording interviews for the This Week in the IndieWeb Podcast, if you’re interested in sharing your IndieWeb journey and thoughts.

There will also be some amazing people to meet, like IndieWeb co-founder Tantek Çelik, IndieWeb WordPress developer David Shanske, awesome designer Hannah Donovan, and many more!

Registration is super-affordable! Free if you have your own personal site! https://2017.indieweb.org/nyc.

Hope to see you there!